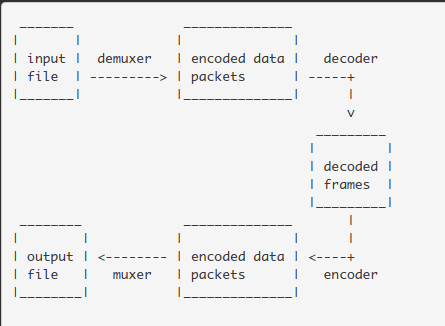

You can see that this works by starting up the capture program from my previous post and piping the raw video output to avconv where it specifies the location of the viewing instance.

Where the address after udp:// is the one that you need to adapt. This has the source code for everything that is discussed from here on down. Getting Startedĭownload the source code for my boneCV repository. UDP Multicasting allows you to have many clients attach to the same video stream, which is really useful if you want to have the viewer instigate the connection and you wish to have many viewers. The downside of unicasting is that the video transmitter needs to know the destination address of the receiver – kind of the opposite of what you might expect! UDP unicasting is very similar to the RTP stream from before except there is no need to distribute a configuration file to the client via RTP or RTSP. This post looks at how you can stream video using UDP unicasting and multicasting. I also just posted on how to stream video data using RTP. The previous post is here and the final instructional page is here. In this post I am going to look at how you can begin streaming data using the camera and code that I had adapted previously. In a previous post, I described how you could set up the Beaglebone Black to capture video and process video using OpenCV with the Logitech C920 camera.
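To make the unicast trade-off concrete, here is a minimal Python sketch of plain UDP unicast. It is my own illustration, not code from boneCV: the receiver simply binds a port and waits, while the sender has to be given the receiver's host and port before it can transmit anything. The function names and the loopback demo address are illustrative assumptions.

```python
import socket


def send_unicast(payload: bytes, host: str, port: int) -> int:
    """Send one UDP datagram to a single, known receiver.

    With unicast the *sender* must be told the receiver's (host, port);
    the receiver never contacts the sender first.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        return sock.sendto(payload, (host, port))


def open_unicast_receiver(port: int = 0, bind_addr: str = "0.0.0.0") -> socket.socket:
    """Bind a UDP socket that waits for datagrams; port 0 lets the OS pick a free port."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((bind_addr, port))
    return sock


if __name__ == "__main__":
    # Local demo: a receiver on the loopback interface and a sender pointed at it.
    receiver = open_unicast_receiver(bind_addr="127.0.0.1")
    receiver.settimeout(2.0)
    rx_port = receiver.getsockname()[1]
    send_unicast(b"frame-0001", "127.0.0.1", rx_port)
    data, _sender_addr = receiver.recvfrom(65535)
    print(data)  # b'frame-0001'
    receiver.close()
```

If the viewer moves to a different machine, the sender has to be restarted with the new address, which is exactly the inconvenience that multicasting avoids.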
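Multicasting flips that around. In the rough Python sketch below (my own illustration, not part of boneCV), each viewer joins a multicast group by passing an `ip_mreq` structure to `IP_ADD_MEMBERSHIP`, and the transmitter only ever addresses the group, so it never needs to know who is watching. The helper names are hypothetical, as are the group address 239.255.0.1 and port 1234 used in the usage note; any IPv4 address in 224.0.0.0/4 is a multicast address, and 239.0.0.0/8 is the administratively scoped block intended for local use.

```python
import ipaddress
import socket
import struct


def membership_request(group: str, iface: str = "0.0.0.0") -> bytes:
    """Pack an ip_mreq struct: multicast group address followed by the local interface address."""
    if not ipaddress.IPv4Address(group).is_multicast:
        raise ValueError(f"{group} is not an IPv4 multicast address (224.0.0.0/4)")
    return struct.pack("4s4s", socket.inet_aton(group), socket.inet_aton(iface))


def open_multicast_receiver(group: str, port: int, iface: str = "0.0.0.0") -> socket.socket:
    """Viewer side: bind the stream's port, then ask the kernel to join the group."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    # On Linux this lets several viewers on one machine bind the same port.
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP,
                    membership_request(group, iface))
    return sock


def open_multicast_sender(ttl: int = 1) -> socket.socket:
    """Transmitter side: datagrams are sent to the group address, not to individual viewers."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    # A TTL of 1 keeps the multicast traffic on the local subnet.
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, ttl)
    return sock
```

A viewer would call `open_multicast_receiver("239.255.0.1", 1234)` and read with `recvfrom` in a loop, while the transmitter calls `sendto(data, ("239.255.0.1", 1234))` on the sender socket. Any number of viewers can join or leave without the transmitter changing anything.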
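The capture-to-avconv pipe can also be driven from a script. The sketch below assumes the capture program writes an already-encoded H.264 stream to stdout (the C920 encodes H.264 on the camera), so avconv can copy it into an MPEG-TS container without re-encoding. The avconv options used (`-re`, `-i -`, `-vcodec copy`, `-f mpegts`) are standard ones, but they are my assumption rather than the exact command from the boneCV scripts, and the helper names, capture command and receiver address are placeholders to adapt.

```python
import subprocess
from typing import List, Sequence


def avconv_udp_args(host: str, port: int) -> List[str]:
    """Build an avconv command that reads a stream on stdin and sends it as
    MPEG-TS over UDP to udp://host:port. For unicast, host is the viewer's
    IPv4 address; for multicast, it is the group address."""
    return [
        "avconv",
        "-re",               # read the input at its native frame rate
        "-i", "-",           # "-" means read the input from stdin
        "-vcodec", "copy",   # pass the encoded video through without re-encoding
        "-f", "mpegts",      # wrap it in an MPEG transport stream
        f"udp://{host}:{port}",
    ]


def stream(capture_cmd: Sequence[str], host: str, port: int) -> int:
    """Run the equivalent of the shell pipeline `capture_cmd | avconv ...`.

    Returns avconv's exit status once both processes have finished.
    """
    capture = subprocess.Popen(list(capture_cmd), stdout=subprocess.PIPE)
    sender = subprocess.Popen(avconv_udp_args(host, port), stdin=capture.stdout)
    # Close our copy of the pipe so capture gets SIGPIPE if avconv exits first.
    capture.stdout.close()
    ret = sender.wait()
    capture.wait()
    return ret
```

For example, `stream(["./capture"], "192.168.1.10", 1234)` would unicast to a single viewer at 192.168.1.10, while passing a group address such as 239.255.0.1 would multicast the same stream. Here `./capture` stands in for the boneCV capture program plus whatever options make it write its video to stdout.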
